A couple of weeks ago I worked with a client that had a particular requirement for their search center in SharePoint Online. They had a repository of research documents, and these documents had a Publishing Date date/time field with values going back as far as 30 years.

The client wanted to build a results page for these documents with a slider bar refiner that uses custom intervals going back up to 10 years.

If we have a numeric managed property, we can specify a custom refiner interval like the one below.

Unfortunately, the Custom option is missing for the date and time data type. We only have predefined intervals that go back up to one year and a "Defined in search schema" option. I am not sure what that option is supposed to mean, but this is the error you will get if you select it.

For this Display Template you must specify custom intervals for the values that will be shown. Please change the refinement settings to use custom intervals.

That message does not help much when the UI gives you no option to specify custom intervals.

Luckily, if you export the Refinement web part you can see more refiner settings. All selected refiners are represented as JSON, and below are the (formatted) settings of our Publication Date refiner.

There are two settings that grab the attention, and they are highlighted in the picture above. The first is "useDefaultDateIntervals", which controls whether the default intervals (covering only one year) are used. The second is "intervals", which holds the custom intervals. After some research on the web I found that the intervals value should be an array of integers representing the intervals in days. I came up with these intervals for my client: Ten Years Ago, Five Years Ago, Three Years Ago, One Year Ago, Six Months Ago, Three Months Ago, One Month Ago, Seven Days Ago and Today. They are set with the following intervals value:

[-3650,-1825,-1095,-365,-180,-90,-30,-7,0]
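The day offsets can also be derived from the labels instead of being typed by hand. The following is a minimal sketch, not part of the web part itself: the `buildIntervals` helper is hypothetical, and the approximations of 365 days per year and 30 days per month are assumptions chosen because they reproduce the array above.

```javascript
// Hypothetical helper: turn "how far back" descriptions into the negative
// day offsets expected by the "intervals" setting.
// Assumption: a year is approximated as 365 days and a month as 30 days.
const DAYS_PER_UNIT = { day: 1, month: 30, year: 365 };

function buildIntervals(boundaries) {
  return boundaries
    // "0 - x" (rather than "-x") avoids producing negative zero for "Today".
    .map(({ amount, unit }) => 0 - amount * DAYS_PER_UNIT[unit])
    // Oldest boundary first, "Today" (0) last.
    .sort((a, b) => a - b);
}

const customIntervals = buildIntervals([
  { amount: 10, unit: "year" },  // Ten Years Ago
  { amount: 5, unit: "year" },   // Five Years Ago
  { amount: 3, unit: "year" },   // Three Years Ago
  { amount: 1, unit: "year" },   // One Year Ago
  { amount: 6, unit: "month" },  // Six Months Ago
  { amount: 3, unit: "month" },  // Three Months Ago
  { amount: 1, unit: "month" },  // One Month Ago
  { amount: 7, unit: "day" },    // Seven Days Ago
  { amount: 0, unit: "day" },    // Today
]);

console.log(JSON.stringify(customIntervals));
// prints [-3650,-1825,-1095,-365,-180,-90,-30,-7,0]
```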

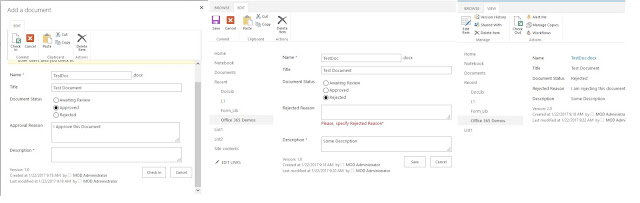

The first step is to update the values of "useDefaultDateIntervals" and "intervals". Set "useDefaultDateIntervals" to false and, for "intervals", use your interval array, as in the picture below.
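If you prefer to script the change rather than edit the exported JSON by hand, the update could look roughly like this. It is a sketch under assumptions: only "useDefaultDateIntervals" and "intervals" come from the exported settings described above, while the `withCustomDateIntervals` helper and the `propertyName` value are placeholders for illustration.

```javascript
// Sketch: apply custom date intervals to one refiner's settings object.
// Only "useDefaultDateIntervals" and "intervals" are taken from the exported
// web part; the helper name and "propertyName" value are placeholders.
function withCustomDateIntervals(refiner, intervals) {
  if (!Array.isArray(intervals) || !intervals.every(Number.isInteger)) {
    throw new TypeError("intervals must be an array of whole day offsets");
  }
  // Return a copy so the originally exported settings stay untouched.
  return {
    ...refiner,
    useDefaultDateIntervals: false,
    intervals: [...intervals],
  };
}

const exportedRefiner = {
  propertyName: "HypotheticalPublishingDate", // placeholder, not a real name
  useDefaultDateIntervals: true,
  intervals: null,
};

const updatedRefiner = withCustomDateIntervals(
  exportedRefiner,
  [-3650, -1825, -1095, -365, -180, -90, -30, -7, 0]
);

// Serialize again before pasting the value back into the exported web part.
console.log(JSON.stringify(updatedRefiner, null, 2));
```

Returning a copy keeps the exported settings available for comparison if the import does not behave as expected.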

Then import the web part and use it on your page. The result is below.

We have our custom intervals, and they work as expected (at least for me). However, there is one big issue: the intervals are not labeled appropriately. This should be fixed in the refiner display template.

Editing the out-of-the-box display template is neither good practice nor practical in this case, so I created a new display template based on the out-of-the-box "Slider with bar graph".

In the new template I have specified values for the Label and the NextIntervalLabel of all "filter boundaries". In this example we are going to have 10 boundaries. NextIntervalLabel is used when you move the mouse over the bar, and Label is used for the boundary label in the slider. You can see the entire template below.

On line 104 we can see how to get all boundaries and their values for Label and NextIntervalLabel.
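Independently of the template itself, the labeling idea can be sketched on plain objects. This is an illustration only, not the template code: the `labelBoundaries` helper is hypothetical, the label texts are placeholders, and plain objects stand in for the filter boundaries. Only the `Label` and `NextIntervalLabel` field names come from the description above.

```javascript
// Sketch: assign Label and NextIntervalLabel to each boundary by position.
// Plain objects stand in for the template's filter boundaries (assumption).
function labelBoundaries(boundaries, labels) {
  if (boundaries.length !== labels.length) {
    throw new RangeError("one label entry is required per boundary");
  }
  boundaries.forEach((boundary, i) => {
    boundary.Label = labels[i].Label;                         // slider boundary text
    boundary.NextIntervalLabel = labels[i].NextIntervalLabel; // bar hover text
  });
  return boundaries;
}

// Shortened example with three boundaries; the texts are placeholders.
const sampleBoundaries = [{}, {}, {}];
labelBoundaries(sampleBoundaries, [
  { Label: "One Month Ago", NextIntervalLabel: "One month ago to seven days ago" },
  { Label: "Seven Days Ago", NextIntervalLabel: "Seven days ago to today" },
  { Label: "Today", NextIntervalLabel: "Today" },
]);

console.log(sampleBoundaries.map((b) => b.Label).join(" | "));
// prints One Month Ago | Seven Days Ago | Today
```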

After deploying and setting the new display template, we can see that the labels are much more accurate.

There is one small detail left to update: the start and end labels of the bar graph.

Unfortunately, my solution is to change the text by selecting the elements by class name. This is not the most elegant approach if you have more than one slider bar refiner, because you will then need to change the index number to get the correct elements. You can see the code below.
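The general idea of picking an element by class name and index can be sketched as follows. This is an illustration under assumptions, not the exact code used on the client's page: the `setLabelText` helper and the class name in the usage comment are placeholders, and setting `textContent` is just one way to change the text.

```javascript
// Sketch: overwrite the text of the N-th element that carries a given class.
// `root` is anything exposing getElementsByClassName, normally `document`.
function setLabelText(root, className, index, text) {
  const elements = root.getElementsByClassName(className);
  const element = elements[index];
  if (!element) {
    return false; // nothing at that index, e.g. the refiner is not rendered yet
  }
  element.textContent = text;
  return true;
}

// Usage in the browser; the class name is a placeholder, and the index must
// be adjusted when the page contains more than one slider bar refiner:
// setLabelText(document, "hypothetical-slider-start-label", 0, "Ten Years Ago");
// setLabelText(document, "hypothetical-slider-end-label", 0, "Today");
```

Returning `false` instead of throwing makes it easy to retry later if the refiner has not finished rendering when the script runs.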

With this final touch, this is what our custom slider bar graph refiner looks like.

It looks really cool and is useful too. If you check the refiner settings in the UI now, you will see that "Defined in search schema" is selected. I found this misleading, since I did nothing special in the search schema.

I hope that this was helpful!